We build production-grade ML infrastructure and algorithmic AI solutions for large-scale recommendation systems that serve billions of users and generate massive data volumes (e.g., more than 1B requests per day).

To navigate the vast amount of content on the internet, users rely either on search queries or on content recommendations powered by algorithms.

Our Recsys team focuses on building state-of-the-art ML algorithms and pushing the research boundaries of recommender systems. We also design and deploy scalable, production-grade ML infrastructure and pipelines for fast and easy offline and online ML research and experimentation.

Selected Projects

Incremental Learning for Large-scale CTR Prediction

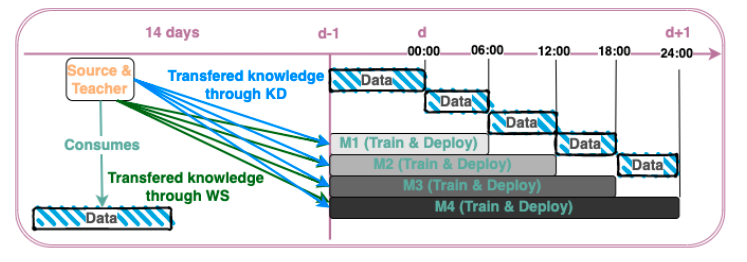

We developed an incremental learning framework for large-scale CTR prediction, applied in Taboola's massive-scale recommendation service. Our approach enables rapid capture of emerging trends by warm-starting from previously deployed models and fine-tuning on "fresh" data only. Past knowledge is maintained via a teacher-student paradigm, in which distillation from the teacher mitigates catastrophic forgetting. The framework enables significantly faster training and deployment cycles (a 12x speedup) and also brings a consistent Revenue Per Mille (RPM) lift across multiple traffic segments, together with a significant CTR increase on newly introduced items.
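To make the warm-start plus distillation idea concrete, here is a minimal sketch on a toy logistic-regression CTR model. It is an illustration under our own assumptions, not the production implementation: the function names, the blending weight `alpha`, the learning rate, and the plain SGD loop are all hypothetical choices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bce(target, pred, eps=1e-12):
    """Binary cross-entropy; `target` may be a hard label or a soft probability."""
    pred = min(max(pred, eps), 1.0 - eps)
    return -(target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred))


def predict(weights, features):
    return sigmoid(sum(w * x for w, x in zip(weights, features)))


def distillation_loss(label, p_teacher, p_student, alpha=0.5):
    """Blend the loss on fresh labels with the loss against the teacher's output."""
    return (1.0 - alpha) * bce(label, p_student) + alpha * bce(p_teacher, p_student)


def incremental_update(teacher_weights, fresh_data, alpha=0.5, lr=0.1, epochs=50):
    """Warm-start a student from the teacher and fine-tune it on fresh data only."""
    student = list(teacher_weights)  # warm start: copy the deployed model's weights
    for _ in range(epochs):
        for features, label in fresh_data:
            p_student = predict(student, features)
            p_teacher = predict(teacher_weights, features)  # teacher stays frozen
            # Gradient of distillation_loss w.r.t. the student logit is
            # p_student minus the blended target.
            target = (1.0 - alpha) * label + alpha * p_teacher
            grad_logit = p_student - target
            student = [w - lr * grad_logit * x for w, x in zip(student, features)]
    return student
```

With `alpha=0` the student simply fine-tunes on the fresh labels; larger values of `alpha` pull it toward the teacher's predictions, which is what limits forgetting in this sketch.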

Exploration vs Exploitation and Uncertainty Estimation

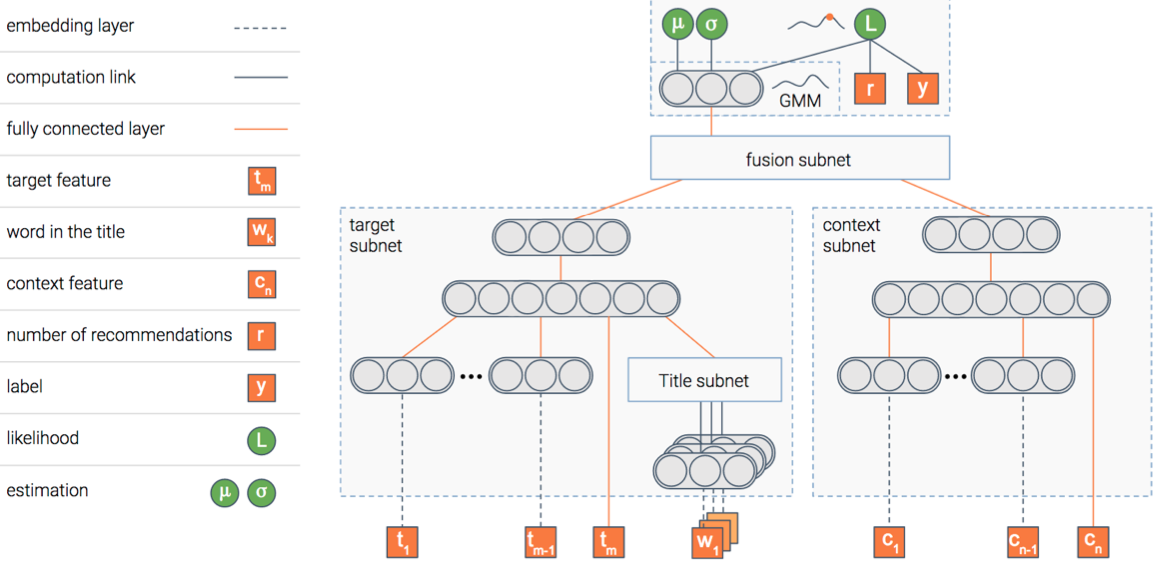

Building robust online content recommendation systems requires learning complex interactions between user preferences and content features. Despite progress, the dynamic nature of online recommendations still poses great challenges, such as finding the delicate balance between exploration and exploitation. In this project we use uncertainty estimates as the basis of an optimistic exploration/exploitation strategy that explores new recommendations more efficiently. We propose a novel hybrid deep neural network model, Deep Density Networks (DDN), which integrates content-based deep learning models with a collaborative scheme and can robustly model and estimate uncertainty.

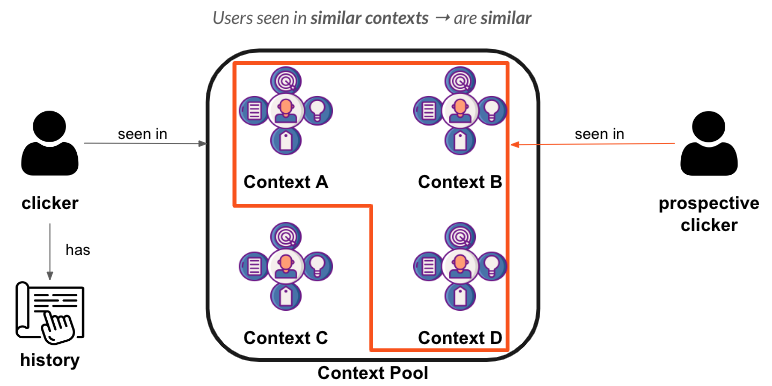
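One common way to turn uncertainty estimates into an optimistic strategy is an upper-confidence-bound style score. The sketch below assumes the model returns a predicted CTR mean and a standard deviation per item; the scoring rule, the `exploration_weight` parameter, and the function names are illustrative assumptions rather than a description of DDN itself.

```python
def optimistic_score(mean, std, exploration_weight=1.0):
    """Optimistic estimate: predicted CTR plus a bonus for uncertain items."""
    return mean + exploration_weight * std


def rank_items(predictions, exploration_weight=1.0):
    """Rank item ids by optimistic score, highest first.

    `predictions` maps item id -> (mean, std), as produced by a hypothetical
    model that estimates both the CTR and its uncertainty.
    """
    def score(item_id):
        mean, std = predictions[item_id]
        return optimistic_score(mean, std, exploration_weight)

    return sorted(predictions, key=score, reverse=True)


predictions = {
    "established": (0.050, 0.002),  # well-known item, low uncertainty
    "new": (0.040, 0.030),          # newly introduced item, high uncertainty
}
pure_exploitation = rank_items(predictions, exploration_weight=0.0)
optimistic = rank_items(predictions, exploration_weight=1.0)
```

With `exploration_weight=0.0` the established item ranks first; with `exploration_weight=1.0` the uncertain new item moves ahead, so it receives traffic and its estimate can tighten.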

Self-Supervised Learning between Users and Context

Neural architecture-based recommender systems have achieved tremendous success in recent years. However, when dealing with highly sparse data, they still fall short of expectations. Self-supervised learning (SSL), an emerging technique for learning from unlabeled data, has recently drawn considerable attention in many fields, and a growing body of research applies SSL to recommendations to mitigate the data sparsity issue. In this project we incorporate SSL to learn rich representations for both the users and the context. We use a two-tower network architecture, in which the first tower consumes user-related features and the second consumes context-related features, and we train the two towers with a contrastive loss.

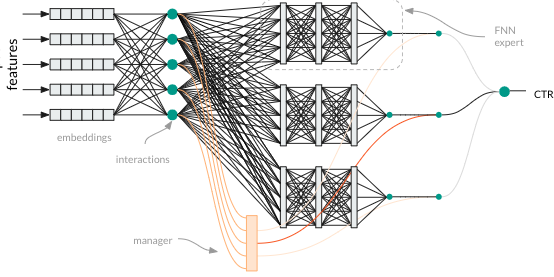
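As an illustration of how a contrastive loss can train two towers, the sketch below computes an InfoNCE-style loss with in-batch negatives over the tower outputs. The choice of InfoNCE, the cosine similarity, and the `temperature` value are assumptions on our part; the embeddings stand in for whatever the user and context towers produce.

```python
import math


def l2_normalize(vec):
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def info_nce_loss(user_embeddings, context_embeddings, temperature=0.1):
    """Contrastive loss over a batch of (user, context) pairs.

    Row i of each input is a positive pair; every other context in the batch
    acts as a negative for user i.
    """
    users = [l2_normalize(u) for u in user_embeddings]
    contexts = [l2_normalize(c) for c in context_embeddings]
    total = 0.0
    for i, user in enumerate(users):
        logits = [dot(user, ctx) / temperature for ctx in contexts]
        # Numerically stable log-sum-exp for the softmax denominator.
        max_logit = max(logits)
        log_sum_exp = max_logit + math.log(sum(math.exp(l - max_logit) for l in logits))
        total += log_sum_exp - logits[i]  # cross-entropy with the positive at index i
    return total / len(users)
```

The loss is small when each user embedding is closest to its own context embedding and grows when a user is closer to another row's context.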

Mixture of Experts in Recommender Systems

Neural-based learning has been used successfully in many real-world, large-scale applications such as recommendation systems. Mixture-of-experts (MoE), a type of conditional computation in which parts of the network are activated on a per-example basis, has been proposed as a way of dramatically increasing model capacity without a proportional increase in computation. In this project we combine the two: we employ MoE-type deep learning models for CTR and CVR estimation and incorporate prior knowledge to bootstrap the manager’s (gating network’s) routing to the experts. In addition, a load-balancing scheme is employed as regularization to avoid the collapse phenomenon, in which the manager routes almost all examples to a few experts.
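A minimal sketch of the pieces involved: a softmax manager, a weighted combination of experts, and a load-balancing penalty. The penalty used here is the squared coefficient of variation of per-expert importance, a common choice in the MoE literature; it, the additive `prior_logits` used to bias routing, and all function names are assumptions for illustration, not the scheme used in production.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def gate(features, gate_weights, prior_logits=None):
    """Manager: one weight vector per expert, optionally biased by prior logits."""
    logits = [sum(w * x for w, x in zip(ws, features)) for ws in gate_weights]
    if prior_logits is not None:
        # Hypothetical way to inject prior knowledge: shift the routing logits.
        logits = [l + p for l, p in zip(logits, prior_logits)]
    return softmax(logits)


def moe_output(features, experts, gate_probs):
    """Combine expert predictions, weighted by the manager's probabilities."""
    return sum(p * expert(features) for p, expert in zip(gate_probs, experts))


def load_balancing_penalty(batch_gate_probs):
    """Squared coefficient of variation of per-expert importance over a batch.

    Zero when all experts receive equal total gate weight; grows as routing
    collapses onto a few experts.
    """
    num_experts = len(batch_gate_probs[0])
    importance = [sum(probs[e] for probs in batch_gate_probs) for e in range(num_experts)]
    mean = sum(importance) / num_experts
    variance = sum((v - mean) ** 2 for v in importance) / num_experts
    return variance / (mean ** 2)
```

Adding this penalty, scaled by a small coefficient, to the CTR/CVR training loss discourages the manager from sending every example to the same expert.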

Knowledge Distillation for Transfer Learning

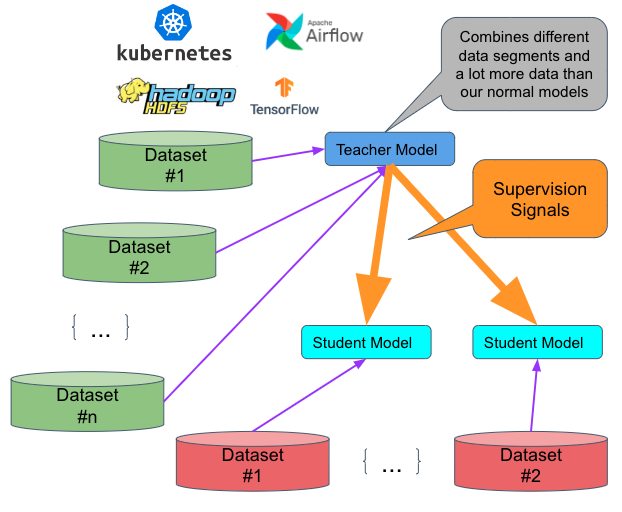

Recent methods based on Knowledge Distillation (KD) have been developed not only for model compression but also to produce more accurate models; they have mainly been applied to public datasets, with state-of-the-art results. In this project we propose “xt-KD”, a novel framework for both cross-data and temporal Knowledge Distillation, which was applied in large-scale production systems to address the major challenges of serving billions of requests per day. xt-KD is a transfer-learning-inspired approach that builds on KD, extending and reformulating it efficiently and adapting it to very large datasets, so as to leverage both previously accumulated knowledge and diverse data partitions (hence the term “cross-data”). With xt-KD we transfer knowledge between different cohorts of our data corpus, reuse and distill knowledge from previously deployed models into new ones, and evaluate several scenarios and ablations of cross-data and temporal knowledge-transfer setups.

Knowledge Distillation: Cross Data and Temporal Knowledge Distillation for improved Click-Through-Rate

In this talk we present a novel transfer learning framework for both cross-dataset and temporal Knowledge Distillation, termed “cross-temporal-KD” (xt-KD for short). The framework is motivated by challenges in large-scale production systems and builds on the Student-Teacher (ST) paradigm to address the main challenges in the development, training, and inference cycle of recommendation systems: such a method has to satisfy all performance requirements for fast inference and efficient training.

Our solution exploits supplementary knowledge across diverse datasets and transfers it through the teacher model. In other words, we transfer knowledge from different segments of our data corpus by training the teacher on a larger and more diverse dataset that is a superset of the student’s data. Additionally, to take advantage of already trained models instead of repeatedly training new models from scratch, we reuse models trained on previous timestamps as teachers. With this simple yet elegant methodological setup and the corresponding experimental production pipeline, we are able to transfer knowledge both across time and across different datasets in a model-agnostic way, building on the ST paradigm.
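The two ingredients described above, a teacher from an earlier timestamp whose data covers the student's, and model-agnostic transfer through the teacher's predictions, can be sketched as follows. The `TrainedModel` record, the registry, the selection rule, and the blending weight `alpha` are all hypothetical names and choices made for illustration; they are not the xt-KD pipeline itself.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List, Optional, Sequence, Tuple


@dataclass
class TrainedModel:
    trained_at: int                              # hypothetical training timestamp
    segments: FrozenSet[str]                     # data segments the model was trained on
    predict: Callable[[Sequence[float]], float]  # any model exposing a CTR prediction


def select_teacher(registry: List[TrainedModel],
                   student_segments: FrozenSet[str],
                   now: int) -> Optional[TrainedModel]:
    """Pick the most recent earlier model whose data is a superset of the student's."""
    candidates = [m for m in registry
                  if m.trained_at < now and m.segments >= student_segments]
    return max(candidates, key=lambda m: m.trained_at) if candidates else None


def soft_targets(rows: List[Tuple[Sequence[float], float]],
                 teacher: TrainedModel,
                 alpha: float = 0.5) -> List[Tuple[Sequence[float], float]]:
    """Blend each hard label with the teacher's prediction for the same row."""
    return [(features, (1.0 - alpha) * label + alpha * teacher.predict(features))
            for features, label in rows]
```

Because the teacher is used only through `predict`, the student can have a different architecture; it is then trained on the blended targets with its usual loss.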

Petros Katsileros

Team Lead