For AI to tackle real-world problems and generalise, it needs to build an "understanding" of them. We ground our approaches in real-life data and develop them in explainable ways.

Our world is characterised by enormous visual variability and diversity. Yet humans have a remarkable ability not only to focus on relevant, useful information but also to translate it into the high-level concepts needed for reasoning and decision making.

Our Vision and Language team focuses on building state-of-the-art systems that learn to fuse visual information with linguistic common sense in order to achieve human-level, human-inspired understanding of visual scenes. We aim to accurately recognise how objects interact and the common sense behind these interactions, to answer questions about images in an explainable manner, and to provide correct explanations of the reasoning behind those answers.

Projects

Learning the Spatial Common Sense of Relations

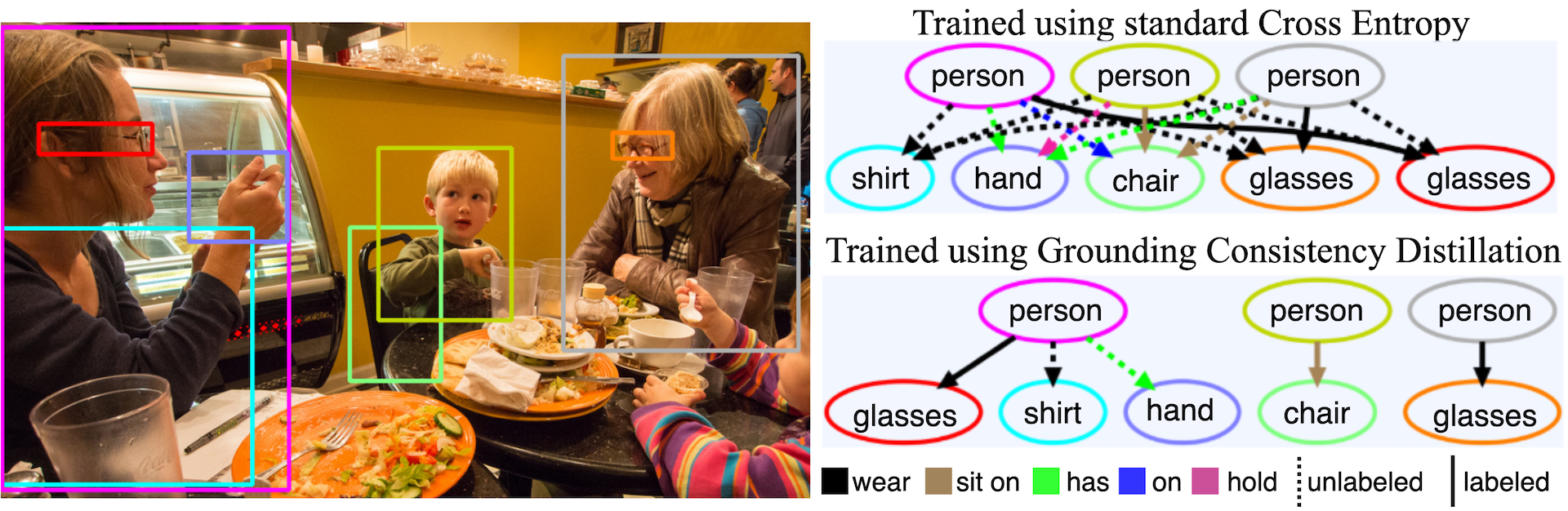

Due to imbalanced training data, scene graph generation models tend to predict relations based only on which objects interact, not on how they interact. For example, a person will be predicted to sit on a chair regardless of their spatial position. Our method mitigates this flaw by enforcing grounding consistency between two inverse problems, namely relationship detection and grounding.
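The consistency idea can be illustrated with a small numpy sketch. It is not the method's actual objective. It assumes a detector that scores R predicates for each of P candidate subject-object box pairs, and a grounder that, given a predicate query, scores those same P pairs (object categories are held fixed for simplicity). The hypothetical function `grounding_consistency_loss` asks that grounding the detector's prediction for a pair leads back to that same pair, using a soft (differentiable) version rather than an argmax.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def grounding_consistency_loss(det_logits, ground_logits, eps=1e-12):
    """Hypothetical cycle-consistency term between detection and grounding.

    det_logits:    (P, R) predicate scores for each of P box pairs
                   (detection: pair -> predicate).
    ground_logits: (R, P) box-pair scores for each predicate query
                   (grounding: predicate -> pair).
    For pair i, the probability of returning to i after detecting and then
    grounding is sum_r p_det(r | i) * p_ground(i | r); we minimise its
    negative log, averaged over pairs.
    """
    det_probs = softmax(det_logits, axis=1)        # (P, R)
    ground_probs = softmax(ground_logits, axis=1)  # (R, P)
    # Element [i, r] of the product is p_det(r | i) * p_ground(i | r).
    back = (det_probs * ground_probs.T).sum(axis=1)  # (P,)
    return float(-np.log(back + eps).mean())
```

If a detector predicts "sitting on" for a person-chair pair purely from co-occurrence, while the grounder localises "sitting on" on a different pair whose spatial layout actually fits, this term penalises the mismatch.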

Weakly Supervised Visual Relationship Detection

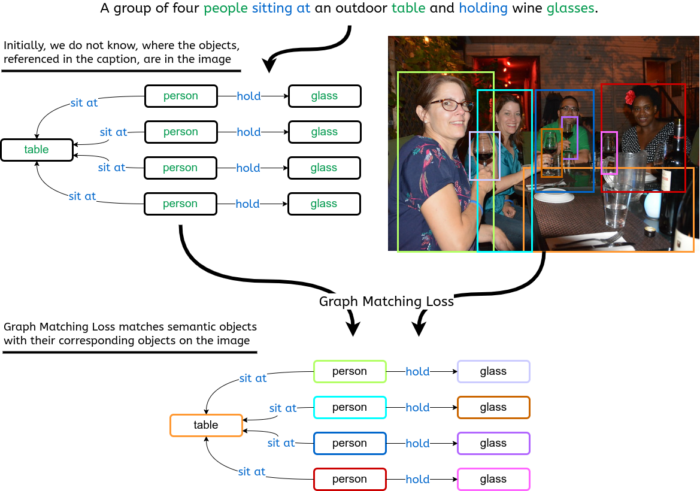

High-level representations of images as relation graphs should, as humans do, distinguish salient from unimportant information in an image. However, current datasets do not provide this information. We tackle this challenge by using image-caption pairs as weak annotations for learning dense object relations as well as their importance.

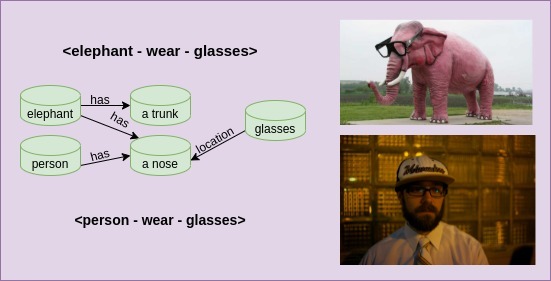

Recognising Unseen Relations using External Knowledge

Datasets providing relation annotations span only a small subset of plausible relation combinations. For example, an elephant wearing glasses is unlikely to appear during model training, yet human common sense tells us it is certainly plausible. How can we learn the common sense behind relations and enable detection of previously unseen combinations of objects?

Explainable Visual Commonsense Reasoning

Our area of interest is Visual Commonsense Reasoning, with a focus on interpretability and explainability. Given a set of movie scenes and corresponding questions, our task is not only to choose the correct answer but also to provide a plausible explanation.

Explainable Visual Commonsense Reasoning

Given a set of movie scenes and corresponding questions, our task is not only to choose the correct answer but also to provide a plausible explanation. This task requires a combination of linguistic common sense (e.g. an office generally contains computers) and visual grounding capabilities (e.g. locating the computer in order to deduce where a scene takes place).

We are building models whose architecture is inherently interpretable, which lets us visualise exactly where a model "looks" in order to give a specific answer (see figure on the right). This enables us not only to understand why our models make certain predictions but also to use those interpretability variables as part of our training objective.
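One way interpretability variables can enter a training objective is attention supervision. The sketch below is an assumption, not the loss our models actually use: it treats a softmax attention distribution over N image regions as the visualisable "where the model looks" signal, and assumes we know which regions the correct answer refers to. The hypothetical `answer_with_attention_loss` adds, with a hypothetical weight `lam`, a penalty when little attention mass falls on those regions.

```python
import numpy as np


def log_softmax(x):
    # Numerically stable log-softmax of a 1-D score vector.
    z = x - x.max()
    return z - np.log(np.exp(z).sum())


def answer_with_attention_loss(answer_logits, answer_idx, attn_logits,
                               target_regions, lam=0.5):
    """Hypothetical objective: answer cross-entropy + attention supervision.

    answer_logits:  (A,) scores for A candidate answers.
    answer_idx:     index of the correct answer.
    attn_logits:    (N,) pre-softmax attention scores over N image regions.
    target_regions: indices of regions the correct answer refers to
                    (assumed known for this sketch).
    lam:            weight of the attention term (assumed value).
    """
    answer_loss = -log_softmax(answer_logits)[answer_idx]
    attn = np.exp(log_softmax(attn_logits))
    # Penalise low total attention mass on the referenced regions.
    attention_loss = -np.log(attn[target_regions].sum())
    return float(answer_loss + lam * attention_loss)
```

A model that answers correctly while looking at irrelevant regions still pays the attention term, which is the kind of behaviour a visualisation like the one in the figure would expose.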

Figure example text: "The boy is climbing all over the man." / "He is turned away from him, and focused on his cigarette."

Mary Parelli

Weakly Supervised Visual Relationship Detection

Our approach discards annotated graphs and uses only image captions, which are inherently salient, to extract semantic scene graphs; these graphs then weakly supervise our models through a Graph Matching Loss function. The benefits of this approach are twofold. First, we can scale our training data to the available large-scale image-text datasets. Second, our models learn to prioritise the image relationships that would be most meaningful to a human.
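To make the matching step concrete, here is one simple form a graph-matching loss can take. It is a sketch under stated assumptions, not our Graph Matching Loss: caption parsing into (subject_class, predicate, object_class) id triplets is assumed to happen upstream, and the model is assumed to output class probabilities per detected region and predicate probabilities per ordered region pair. The hypothetical `caption_graph_matching_loss` assigns each caption triplet to a distinct region pair with the Hungarian algorithm (`scipy.optimize.linear_sum_assignment`) and returns the mean negative log-score of that assignment.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def caption_graph_matching_loss(cls_probs, rel_probs, caption_triplets, eps=1e-12):
    """Hypothetical weak-supervision loss from a caption scene graph.

    cls_probs:        (N, C) class probabilities for N detected regions.
    rel_probs:        (N, N, R) predicate probabilities per ordered region pair.
    caption_triplets: list of (subject_class, predicate, object_class) ids.
    Returns (loss, matches), where matches maps triplet index -> (i, j).
    """
    n = cls_probs.shape[0]
    pairs = [(i, j) for i in range(n) for j in range(n) if i != j]
    log_cls = np.log(cls_probs + eps)
    log_rel = np.log(rel_probs + eps)

    # cost[t, k] = -log score of grounding caption triplet t on region pair k
    cost = np.empty((len(caption_triplets), len(pairs)))
    for t, (s, r, o) in enumerate(caption_triplets):
        for k, (i, j) in enumerate(pairs):
            cost[t, k] = -(log_cls[i, s] + log_cls[j, o] + log_rel[i, j, r])

    # One-to-one assignment of caption triplets to region pairs.
    rows, cols = linear_sum_assignment(cost)
    loss = float(cost[rows, cols].mean())
    matches = {int(t): pairs[k] for t, k in zip(rows, cols)}
    return loss, matches
```

Because only caption triplets contribute, relations that a caption writer found worth mentioning drive the loss, which is how salience enters training in this sketch.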

*Thesis project co-supervised by Prof. Petros Maragos @NTUA

Alexandros Benetatos